Near-Data Processing

“Create Computer Systems Near Memories”

Near-data processing (NDP) is another way to address the expensive data movement problem of the traditional compute-centric model. It refers to augmenting memory or storage with processing power. By placing computing capabilities directly in the memory or storage, data can be processed in place, which significantly reduces data movement.

Moreover, host resources are freed up and can be used for other activities running on the host. Because near-data processing both accelerates computation and avoids transferring data, it has great potential in future computer architectures. In our NDP research, we focus on application-specific scenarios that maximize the data reduction effect.

Nearest Neighbor

Nearest neighbor search, which finds a given number of the closest points to a query in a high-dimensional space, is a fundamental task in many domains, including machine learning, data analysis, and information retrieval. However, as the volume of available data increases exponentially in the era of big data, large-scale search often comes with strict service-level agreement (SLA) requirements such as low latency, high bandwidth, and search relevance. To meet these requirements, cloud service providers need to employ an expensive compute cluster of high-end CPUs with many DIMMs, so that all database vectors can be held in memory and a fast approximate nearest neighbor (ANN) search can be performed in a distributed manner. This scale-out approach is costly in terms of operating cost, and it is also expensive because of the time and energy overhead of moving a huge volume of data from storage to compute devices.
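To make the task concrete, the following is a minimal sketch of exact k-nearest-neighbor search as a brute-force scan, using only the Python standard library. It illustrates the search problem itself, not an NDP or ANN implementation; the function name `k_nearest`, the Euclidean metric, and the toy data are assumptions made for illustration.

```python
import heapq
import math


def k_nearest(database, query, k):
    """Return the indices of the k database vectors closest to `query`.

    Hypothetical helper: scans every vector and ranks by Euclidean distance.
    """
    return heapq.nsmallest(
        k,
        range(len(database)),
        key=lambda i: math.dist(database[i], query),
    )


# Toy 2-D data set (illustrative values only).
database = [(0.0, 0.0), (1.0, 1.0), (5.0, 5.0), (0.9, 1.2)]
query = (1.0, 1.0)

nearest = k_nearest(database, query, k=2)
print(nearest)  # indices of the two closest vectors
```

The scan reads every vector in the database but returns only k indices. That gap between data read and data returned is the kind of data reduction that makes this workload a candidate for processing near the data.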

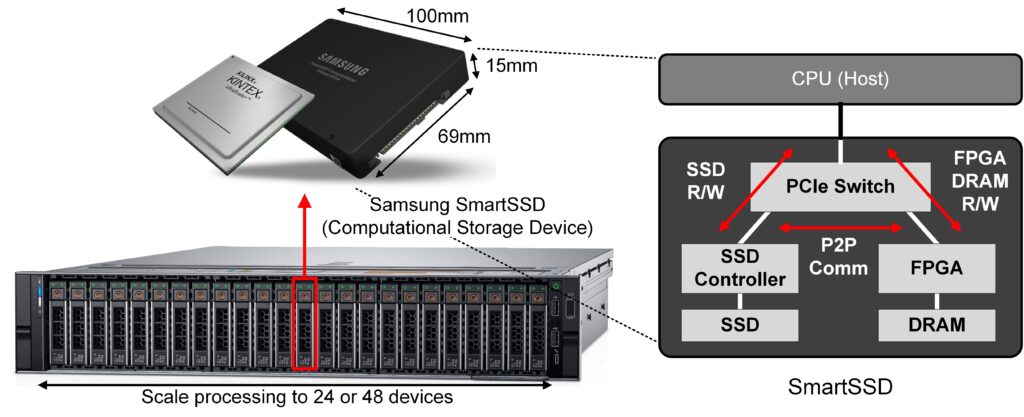

Therefore, near-data processing can be a cost-effective and energy-efficient solution for accelerating large-scale nearest neighbor search for cloud services. In particular, by building a computational storage platform from computational storage devices, fast search with lower power dissipation becomes possible compared with conventional CPU- and GPU-based server platforms.